In 2026, the real AI problem is not model capability but weak infrastructure. Most enterprise AI implementation challenges stem from poor data pipelines, a lack of observability, and fragile deployment systems, not from inaccurate large language models (LLMs). Organizations that invest only in better models without strengthening Enterprise AI infrastructure struggle to scale AI reliably. What fails is not intelligence, but the systems meant to support it.

The AI industry’s obsession with LLM benchmarks is fading fast, replaced by infrastructure mastery as the real key to success. In the United States, Big Tech’s massive CAPEX investments crossed $300 billion in 2025, with Amazon investing $100B, Microsoft $80B, and companies like Alphabet and Meta channeling capital into data centers, GPUs, and cloud networks rather than frontier models. This shift clearly signals where scalable AI value truly lies: data and compute infrastructure for AI, not model hype.

1. The New 80/20 Rule: Data Modernization is the Main Event

Many companies believe they must overhaul every bit of data before launching AI initiatives, but that’s a myth holding them back.

The new 80/20 rule flips this: modernizing just 20% of your most critical data pipelines unlocks 80% of the AI value at scale, letting you move fast without perfection.

Data scarcity and privacy rules have long been barriers, but synthetic data generation, which creates realistic but artificial datasets, has become a trusted standard. For example, a bank can generate synthetic customer transaction data to train fraud detection models without exposing real account details, safely tapping “off-limits” sources.
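As a rough illustration of the bank example, the sketch below generates labeled synthetic transactions using only the Python standard library. Everything in it is an assumption made for illustration: the `Transaction` fields, the merchant categories, the log-normal amount distributions, and the idea that fraudulent amounts skew larger. A production generator would fit these distributions to real data under privacy controls.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Transaction:
    account_id: str          # synthetic ID, never a real account number
    amount: float
    merchant_category: str
    is_fraud: bool


def generate_synthetic_transactions(
    n: int, fraud_rate: float = 0.02, seed: int = 0
) -> List[Transaction]:
    """Return n artificial transactions with a fraud label.

    The distributions are illustrative assumptions, not fitted to real data.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    categories = ["grocery", "travel", "electronics", "dining"]
    rows = []
    for _ in range(n):
        is_fraud = rng.random() < fraud_rate
        # Assumption: fraudulent amounts are drawn from a larger-valued
        # log-normal distribution than legitimate ones.
        mu = 6.0 if is_fraud else 3.5
        rows.append(
            Transaction(
                account_id=f"SYN-{rng.randrange(10**6):06d}",
                amount=round(rng.lognormvariate(mu, 0.8), 2),
                merchant_category=rng.choice(categories),
                is_fraud=is_fraud,
            )
        )
    return rows
```

Calling `generate_synthetic_transactions(10_000, fraud_rate=0.02)` yields a labeled training set that contains no real customer records, which is the property the bank example relies on.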

Waiting for flawless organic data delays progress while competitors build resilient Enterprise AI infrastructure that compounds value over time.

2. The Shift to “Semantic Telemetry”

Static business intelligence dashboards were once the go-to for spotting trends after the fact, but they are fading as companies demand speed in a real-time world. The shift to “Semantic Telemetry” replaces them with streaming sentiment analysis powered by LLMs, moving beyond simple keyword counts to deep, contextual understanding of customer emotions and intents.

This approach pairs live data streams from chats, reviews, and social feeds with LLM-driven root cause extraction, pinpointing why sentiment dips (for example, a pricing glitch frustrating users) in minutes rather than months.

Imagine an e-commerce platform. Traditional BI might show a sales drop a week later via aggregated metrics. Semantic Telemetry instead detects rising “overpriced” sentiment in real-time chats, traces it to a dynamic pricing bug via LLM analysis, and alerts teams to fix it before churn spikes, turning potential losses into quick wins. This is Enterprise AI infrastructure delivering operational agility, not just insight.
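The e-commerce scenario can be sketched as a rolling window over classified messages that raises an alert when one negative topic dominates. This is a minimal sketch under stated assumptions: `keyword_stub_classifier` is a hypothetical stand-in for an LLM call (it only matches keywords so the example stays runnable offline), and the window size and alert ratio are arbitrary illustrative values.

```python
from collections import Counter, deque
from typing import Callable, Deque, Optional, Tuple

Label = Tuple[str, str]  # (sentiment, topic)


def keyword_stub_classifier(message: str) -> Label:
    """Hypothetical stand-in for an LLM that returns (sentiment, topic)."""
    text = message.lower()
    if "overpriced" in text or "too expensive" in text:
        return ("negative", "pricing")
    if "love" in text or "great" in text:
        return ("positive", "general")
    return ("neutral", "general")


class SentimentWindow:
    """Tracks the most recent messages and flags a dominant negative topic."""

    def __init__(
        self,
        classify: Callable[[str], Label],
        window_size: int = 50,
        alert_ratio: float = 0.3,
    ) -> None:
        self.classify = classify
        self.window: Deque[Label] = deque(maxlen=window_size)
        self.alert_ratio = alert_ratio

    def observe(self, message: str) -> Optional[str]:
        """Record one message; return a topic name if it should trigger an alert."""
        self.window.append(self.classify(message))
        if len(self.window) < self.window.maxlen:
            return None  # not enough data yet to judge a trend
        negative_topics = Counter(
            topic for sentiment, topic in self.window if sentiment == "negative"
        )
        if not negative_topics:
            return None
        topic, count = negative_topics.most_common(1)[0]
        if count / len(self.window) >= self.alert_ratio:
            return topic
        return None
```

In a real deployment `observe` would be fed from a message stream, and the returned topic would be passed to an alerting system along with sample messages for root cause analysis.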

3. The Rise of True Agency (Beyond the Chatbot)

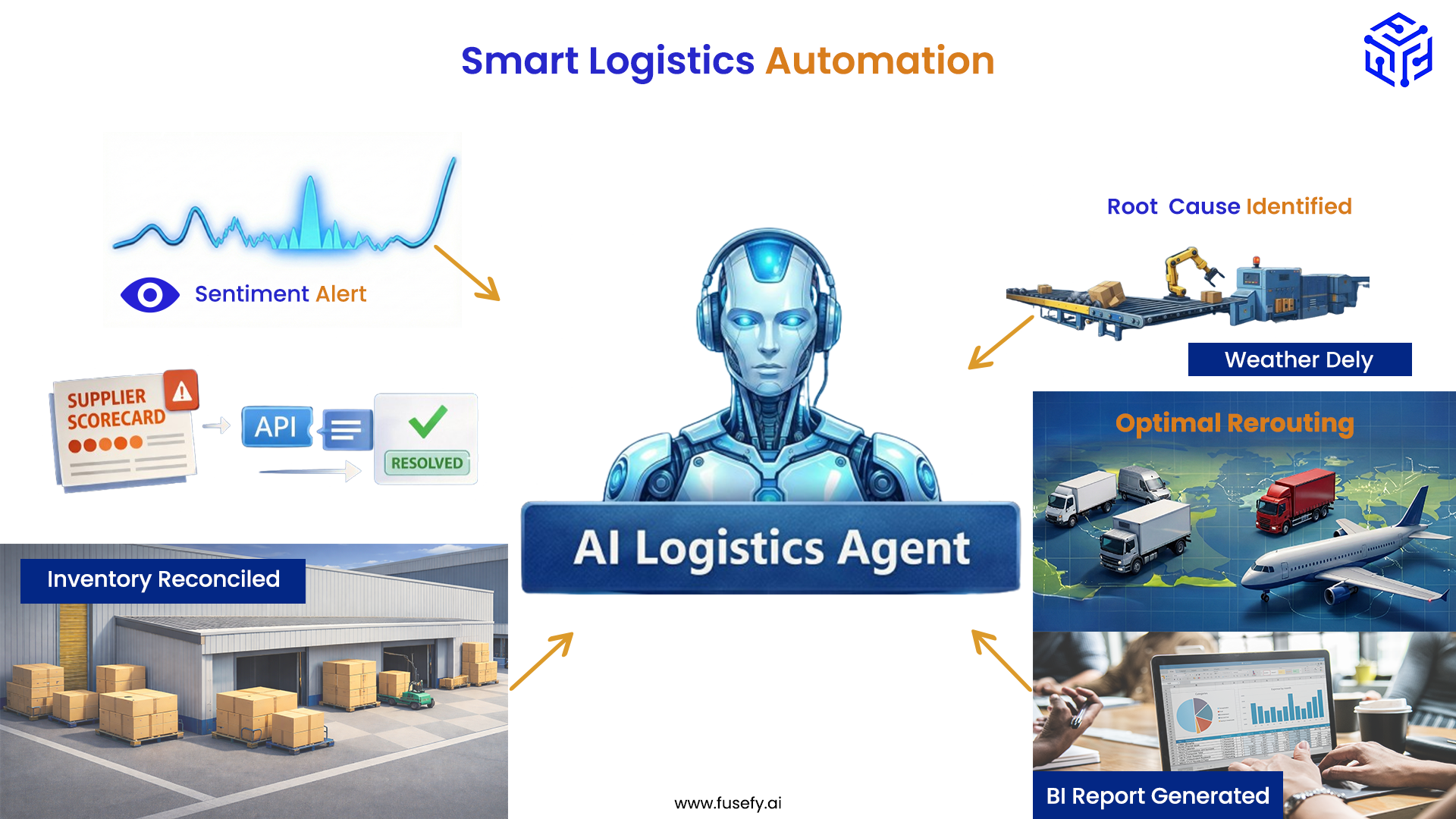

Alongside semantic telemetry’s real-time insights, enterprise automation is evolving from basic chatbots that merely summarize emails to sophisticated Agentic Workflows, in which autonomous agents use tools to orchestrate multi-step tasks without constant supervision.

These agents deliver massive time savings through targeted capabilities:

- autonomous ticket classification that instantly routes and prioritizes support issues;

- self-healing troubleshooting flows that diagnose and resolve IT glitches like network outages via API calls;

- supply chain reconciliation that cross-checks inventory discrepancies across vendors in seconds; and

- automated BI report generation that pulls live data, applies semantic analysis, and drafts executive summaries on demand.

True agency depends not on smarter prompts, but on robust data infrastructure for AI, reliable APIs, and observability baked into Enterprise AI infrastructure.
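To make the dependency on reliable tools and observability concrete, here is a minimal sketch of a ticket-handling workflow. It is illustrative only: the tool names, the keyword-based `classify_ticket` rule, and the queue names are hypothetical, and the step list is fixed by the caller, whereas a real agent would typically let a model choose the next tool.

```python
from typing import Callable, Dict, List, Tuple

Ticket = Dict[str, object]


def classify_ticket(ticket: Ticket) -> Ticket:
    """Hypothetical classifier: a keyword rule standing in for a model call."""
    text = str(ticket["text"]).lower()
    if "outage" in text or "down" in text:
        return {**ticket, "category": "network", "priority": "high"}
    return {**ticket, "category": "general", "priority": "normal"}


def route_ticket(ticket: Ticket) -> Ticket:
    """Assign a queue based on the category set by classify_ticket."""
    queue = "noc-oncall" if ticket["category"] == "network" else "helpdesk"
    return {**ticket, "queue": queue}


# Tool registry: the only actions the workflow is allowed to take.
TOOLS: Dict[str, Callable[[Ticket], Ticket]] = {
    "classify": classify_ticket,
    "route": route_ticket,
}


def run_workflow(
    ticket: Ticket,
    steps: List[str],
    tools: Dict[str, Callable[[Ticket], Ticket]] = TOOLS,
) -> Tuple[Ticket, List[str]]:
    """Run tools in order, recording a trace entry for every step."""
    state = dict(ticket)
    trace: List[str] = []
    for step in steps:
        tool = tools.get(step)
        if tool is None:
            trace.append(f"{step}: unknown tool, stopping")
            break
        try:
            state = tool(state)
        except Exception as exc:  # a failing tool halts the run but is logged
            trace.append(f"{step}: failed ({type(exc).__name__})")
            break
        trace.append(f"{step}: ok")
    return state, trace
```

The trace list is the point of the sketch: every tool call, unknown tool, and failure leaves a record, which is the kind of observability the paragraph above argues agents depend on.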

4. The “Invisible” Threat: Feature Drift Over Model Accuracy

Even well-orchestrated agentic workflows face a stealthier threat in 2025’s AI landscape: feature drift, where the environment evolves faster than models adapt. It causes 90% of failures, which stem not from “dumber” LLMs but from shifting data realities.

Retrieval-augmented generation (RAG) is generally preferred over retraining foundation models, yet it crumbles when:

- upstream changes, such as new product launches, break retrieval assumptions;

- downstream customer behaviors subtly pivot (e.g., seasonal buying spikes);

- database schema tweaks silently alter fields; or

- “shady” pipelines introduce noisy data.

The fix lies in proactive Feature Drift Monitoring, which raises real-time alerts on distribution shifts so that RAG pipelines stay robust amid flux.

For example, suppose a small behind-the-scenes change adds an “eco-friendly” label to products and no one notices how it affects the data. The recommendation system misinterprets customer preferences and starts showing irrelevant items, so fewer users click.

With drift monitoring in place, the issue is caught quickly: the system detects the drop in performance and triggers a recalibration of the data pipeline, fixing the recommendations before sales are affected.
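One common way to detect this kind of distribution shift on a categorical feature is the Population Stability Index (PSI), which compares category shares in a baseline sample against a live sample. The sketch below is one possible approach, not necessarily what any particular monitoring product does. The label values and the 0.25 alert threshold are assumptions; 0.25 is a frequently quoted rule of thumb, not a universal constant.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List, Set


def category_shares(
    values: List[str], categories: Iterable[str], eps: float = 1e-6
) -> Dict[str, float]:
    """Share of each category, floored at eps so unseen categories avoid log(0)."""
    if not values:
        raise ValueError("values must not be empty")
    counts = Counter(values)
    total = len(values)
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(baseline: List[str], live: List[str]) -> float:
    """PSI = sum over categories of (live - base) * ln(live / base)."""
    categories: Set[str] = set(baseline) | set(live)
    base = category_shares(baseline, categories)
    cur = category_shares(live, categories)
    return sum((cur[c] - base[c]) * math.log(cur[c] / base[c]) for c in categories)


def drift_alert(baseline: List[str], live: List[str], threshold: float = 0.25) -> bool:
    """True when the shift exceeds the (assumed) rule-of-thumb threshold."""
    return population_stability_index(baseline, live) > threshold


# Example: a new "eco-friendly" label appears in 30% of live records.
baseline_labels = ["standard"] * 100
live_labels = ["standard"] * 70 + ["eco-friendly"] * 30
print(drift_alert(baseline_labels, live_labels))
```

Because the new label is absent from the baseline, its contribution to the PSI is large, so a previously unseen category is flagged even when it covers a modest share of traffic.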

5. The “Shadow Mode” Standard: Clinical Pilots

Smart organizations counter feature drift’s silent sabotage with the “Shadow Mode” standard: clinical AI pilots that ship 10x faster by prioritizing safety over perfection. Instead of “no-fail” paranoia, they use a unified pipeline that merges feature flags, A/B testing, model gating, and shadow mode into one lifecycle.

This safety net slashes deployment risk by 70% and speeds up iteration: agents run invisibly alongside live systems, and their outputs are validated on real traffic, without user impact, before a gated go-live.

For example, a customer service agent tests upgraded intent detection in shadow mode, A/B tests it on holdout queries, flags regressions, and deploys weekly, turning months of caution into rapid, reliable wins that keep pace with 2026’s infrastructure flywheel.
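The shadow-mode step can be sketched in a few lines: the live model answers the user, the candidate runs on the same input, and only the comparison is logged. This is a simplified sketch; the function names, the log structure, and the gate thresholds are assumptions chosen for illustration, and a real system would usually run the shadow call asynchronously so it adds no latency.

```python
from typing import Callable, Dict, List

LogEntry = Dict[str, object]


def handle_request(
    query: str,
    primary: Callable[[str], str],
    shadow: Callable[[str], str],
    log: List[LogEntry],
) -> str:
    """Serve the primary model's answer; run the shadow model for comparison only."""
    live_answer = primary(query)
    try:
        shadow_answer = shadow(query)
        log.append(
            {
                "query": query,
                "live": live_answer,
                "shadow": shadow_answer,
                "match": live_answer == shadow_answer,
            }
        )
    except Exception as exc:
        # A shadow failure must never reach the user; record it and move on.
        log.append(
            {
                "query": query,
                "live": live_answer,
                "shadow": None,
                "match": False,
                "error": repr(exc),
            }
        )
    return live_answer


def agreement_rate(log: List[LogEntry]) -> float:
    """Fraction of logged requests where both models agreed."""
    return sum(1 for entry in log if entry["match"]) / len(log) if log else 0.0


def passes_gate(
    log: List[LogEntry], min_agreement: float = 0.95, min_samples: int = 100
) -> bool:
    """Assumed promotion rule: enough traffic seen and high enough agreement."""
    return len(log) >= min_samples and agreement_rate(log) >= min_agreement
```

Agreement with the live model is only one possible gate. When the candidate is expected to improve on the live model, the disagreements would typically be reviewed or scored against labeled holdout queries rather than treated as failures.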

Conclusion

As 2026 nears, AI success is no longer about chasing better models; it’s about solving the AI infrastructure problem.

AI Success = Data Modernization + Autonomous Agents + Rapid Pilots

Organizations that modernize critical data, monitor systems in real time, enable autonomous workflows, guard against feature drift, and test safely in shadow mode are the ones that make AI dependable at scale. These foundations turn AI from a fragile proof-of-concept into a capability that adapts, operates continuously, and delivers measurable business value. In short, the advantage in 2026 belongs to those who build resilient AI systems, not just smarter models.